Explore The New Features of OpenAI’s GPT-4o

OpenAI released GPT-4o on May 13, 2024. The updated model can handle text, audio, and video, responds in real time, and offers a variety of expressive voice options, making it significantly more capable than earlier models.

What is GPT-4o?

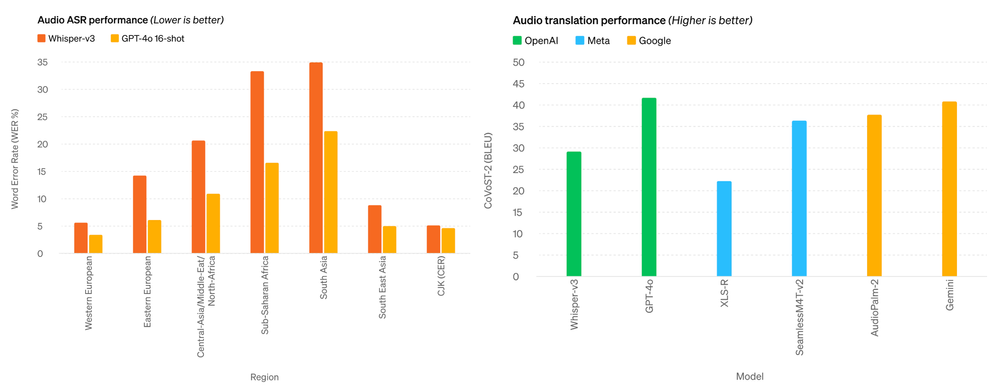

The “o” in GPT-4o stands for “omni.” It is the flagship model in OpenAI’s LLM portfolio. The new model enables ChatGPT to handle 50 different languages with improved speed and quality. It is also available via OpenAI’s API, so developers can start building apps on the new model right away, said Mira Murati, OpenAI’s chief technology officer at the time of the launch. She emphasized that GPT-4o is twice as fast as GPT-4 Turbo while costing half as much.
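As a rough illustration of what API availability looks like in practice, the sketch below builds a Chat Completions request for the `gpt-4o` model using only the Python standard library. The helper name `build_chat_request` and the sample prompt are illustrative assumptions, not part of OpenAI’s announcement, and the request is only constructed here, not sent.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt: str, api_key: str, model: str = "gpt-4o") -> urllib.request.Request:
    """Build (but do not send) a Chat Completions request.

    Hypothetical helper for illustration; api_key is your own OpenAI key.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
```

To send the request, pass the returned object to `urllib.request.urlopen` with a valid key and parse the JSON response; most applications would use OpenAI’s official client library instead.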

This isn’t GPT-4’s first upgrade; the model received a boost in November 2023 with the release of GPT-4 Turbo.

New Features of GPT-4o

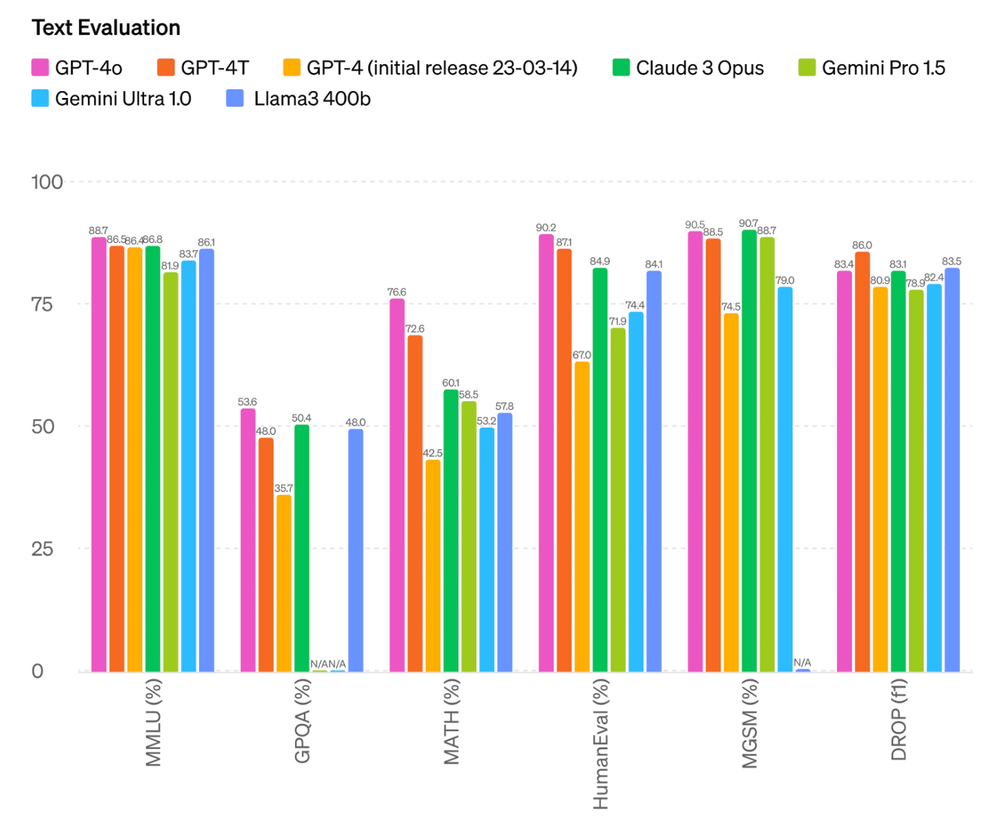

At the time of its release, GPT-4o was OpenAI’s most capable model in both features and performance.

The new version can do the following tasks:

- Real-time interactions

You can hold real-time conversations with the GPT-4o model with very little delay. OpenAI claimed that the new model can answer a user’s audio “in as little as 232 milliseconds, with an average of 320 milliseconds, which is similar to human response time in a conversation.”

- Memory and context awareness

It can recall prior interactions and maintain context over lengthy chats. With a context window of up to 128,000 tokens, it can stay coherent throughout extended conversations or long documents, making it well suited to in-depth study.
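To make the 128,000-token limit concrete, here is a minimal sketch of how an application might keep a long chat inside the context window by dropping the oldest turns first. The four-characters-per-token estimate is a crude assumption for illustration (a real tokenizer would give exact counts), and `estimate_tokens` and `trim_history` are hypothetical helper names.

```python
CONTEXT_WINDOW = 128_000  # tokens, the context window size quoted for GPT-4o
CHARS_PER_TOKEN = 4       # assumption: rough average for English text


def estimate_tokens(text: str) -> int:
    """Crude token estimate; not a real tokenizer."""
    return max(1, len(text) // CHARS_PER_TOKEN)


def trim_history(messages: list[str], budget: int = CONTEXT_WINDOW) -> list[str]:
    """Keep the most recent messages whose estimated total fits the budget."""
    kept: list[str] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > budget:
            break  # everything older than this is dropped
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order
```

Dropping whole messages from the oldest end is only one possible policy; summarizing older turns is a common alternative when early context still matters.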

- Multimodal reasoning and generation

GPT-4o combines text, speech, and vision in a single model, allowing it to process and respond to a variety of input formats. It can also generate answers using voice, images, and text.

- Language and audio processing

It is multilingual and can process more than 50 languages.

- Voice variations

It can generate speech with emotional nuance. This makes it well suited to applications that need sensitive communication.

- Audio content analysis

The model can produce and interpret spoken language, which is useful in voice-activated devices, audio content analysis, and interactive storytelling.

- Real-time translation

The multimodal features of GPT-4o enable real-time translation from one language to another.

- Analyzing images

The model can analyze photos and videos: users upload visual material, and the model interprets, explains, and analyzes it.
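One way to hand an image to the model is to embed it in a chat message as a base64 data URL, using the content-part format that OpenAI’s Chat Completions API accepts for image input. The sketch below only builds the message; `build_image_message` is a hypothetical helper name, and the caller supplies the raw image bytes.

```python
import base64


def build_image_message(question: str, image_bytes: bytes, mime_type: str = "image/png") -> dict:
    """Build a user message pairing a text question with an inline image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {
                "type": "image_url",
                # The image travels inline as a data URL rather than a hosted link.
                "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
            },
        ],
    }
```

The resulting dictionary goes into the `messages` list of a chat request. For video, a common approach is to sample individual frames and send them as images in the same way.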

- Data analysis

The model can use its vision and reasoning capabilities to interpret data presented in charts. It can also generate charts based on analysis or prompts.

- File uploads

It accepts file uploads, so users can supply specific data for analysis that falls outside the model’s knowledge cutoff. The model can also interpret user sentiment across text, audio, and video.

- Customized GPTs

Organizations can build customized GPT-4o versions based on particular business needs or departments. The customized model may soon be made available to customers through OpenAI’s GPT Store.

- Summarizes and generates text

Like previous models, it can handle standard LLM tasks such as text summarization and generation.

GPT-4 vs. GPT-4 Turbo vs. GPT-4o

The table below compares GPT-4, GPT-4 Turbo, and GPT-4o.

| Feature/Model | GPT-4 | GPT-4 Turbo | GPT-4o |
| --- | --- | --- | --- |
| Release Date | March 14, 2023 | November 2023 | May 13, 2024 |
| Context Window | 8,192 tokens | 128,000 tokens | 128,000 tokens |
| Knowledge Cutoff | September 2021 | April 2023 | October 2023 |
| Input Modalities | Text, limited image handling | Text and images (enhanced) | Text, images, and audio (full multimodal capabilities) |
| Vision Capabilities | Basic | Enhanced, includes image generation via DALL-E 3 | Advanced vision and audio capabilities |
| Multimodal Capabilities | Limited | Enhanced image and text processing | Full integration of text, image, and audio |
| Cost | Standard | Input tokens are three times cheaper than GPT-4 | 50% cheaper than GPT-4 Turbo |
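The cost row can be turned into a quick back-of-the-envelope comparison. The sketch below expresses input-token cost relative to GPT-4 (set to 1 unit), taking the table’s ratios at face value and assuming the 50% reduction applies to input tokens as well; it deliberately uses no dollar prices.

```python
from fractions import Fraction

# Relative input-token cost, with GPT-4 as the baseline (assumption: 1 unit).
gpt4 = Fraction(1)
gpt4_turbo = gpt4 / 3   # "three times cheaper than GPT-4"
gpt4o = gpt4_turbo / 2  # "50% cheaper than GPT-4 Turbo"

relative_cost = {"GPT-4": gpt4, "GPT-4 Turbo": gpt4_turbo, "GPT-4o": gpt4o}

for model, cost in relative_cost.items():
    print(f"{model}: {cost} of GPT-4's input cost")
```

Under these assumptions, GPT-4o’s input tokens cost one sixth of GPT-4’s.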

Conclusion

GPT-4o is twice as fast as GPT-4 Turbo and 50% cheaper, offers rate limits five times higher, keeps the 128K context window, and handles every modality in a single model. Each of these is a promising advance for anyone developing AI applications.

GPT-4o’s speed and image/video inputs make it well suited to computer vision workflows, where it can be combined with custom fine-tuned systems and freely available pre-trained models to build business applications.
